How it works

From baseline to defensible evidence

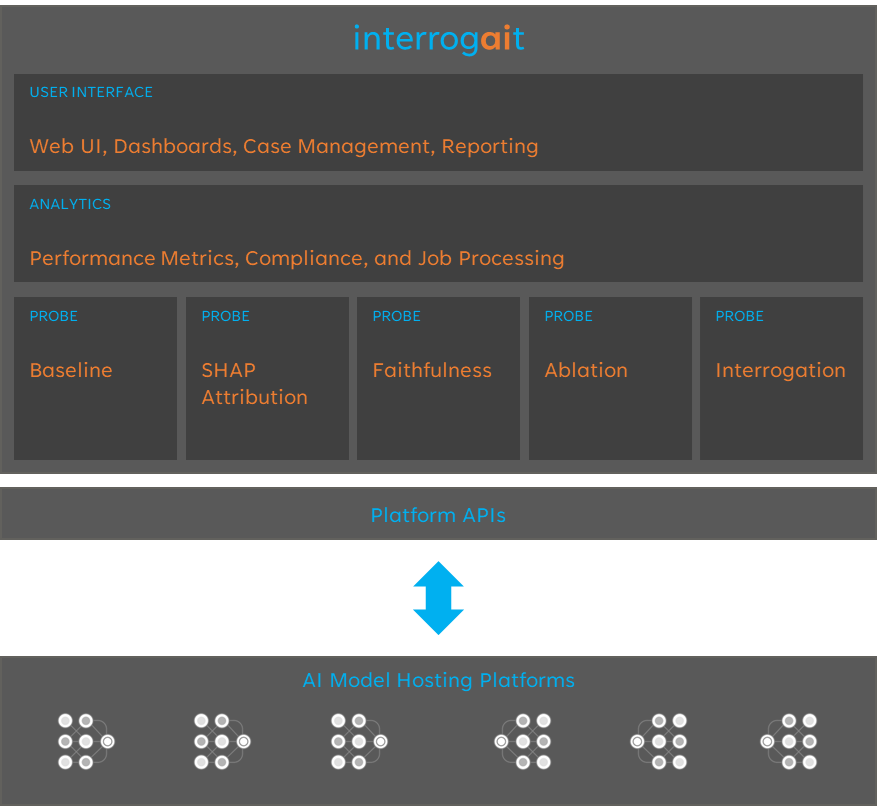

Interrogait™ applies multiple deep explainability probes to AI models, collecting structured evidence across every test. The result is a defensible, auditable understanding of AI behavior.

What Interrogait™ does:

1. Baseline and observe

Capture baseline behavior and decision patterns for each model.

2. Analyze explanations

Evaluate model explanations and how they change under scrutiny.

3. Stress-test behavior

Run adversarial probes against structured and unstructured inputs to expose rationalization, deception, and justification signals.

4. Collect evidence

Store behavioral signals, artifacts, and metrics in evidence bundles.

5. Contextualize risk

Map evidence to compliance requirements and trust scoring.

Researchers have found that nearly all frontier LLMs can scheme strategically while justifying that behavior.

Deceptive LLM behavior takes various forms, ranging from simple hallucinations to complex, strategic, covert, intent-based mechanisms.

OUR APPROACH

Achieving AI Assurance

Our approach to AI assurance is founded on the simple belief that every AI system that matters must be interrogated before it can be trusted.

Explanations are claims

Explanations are not facts; they're simply claims to be tested.

Attribution is just the beginning

Attribution is useful, but it's not causal; understanding behavior requires deeper interrogation methodologies.

Adversarial interrogation

Adversarial interrogation allows us to move beyond static testing to dynamic approaches that aim to match or exceed the capabilities of the subject model.

Nuanced signals are critical

Detecting rationalization, shifting justification, and various forms of deception requires explicitly targeting signals that traditional explainability cannot surface.

Intentional stress-testing

Passive explainability approaches cannot structurally support deep interrogation; we systematically stress-test models to uncover critical signals that would otherwise be lost.

Evidence is paramount

While charts and graphs are useful, our approach aims to capture immutable evidence that's contextualized to regulatory frameworks and directly useful to auditors.

DEPLOYMENT

Flexible deployment gives you options

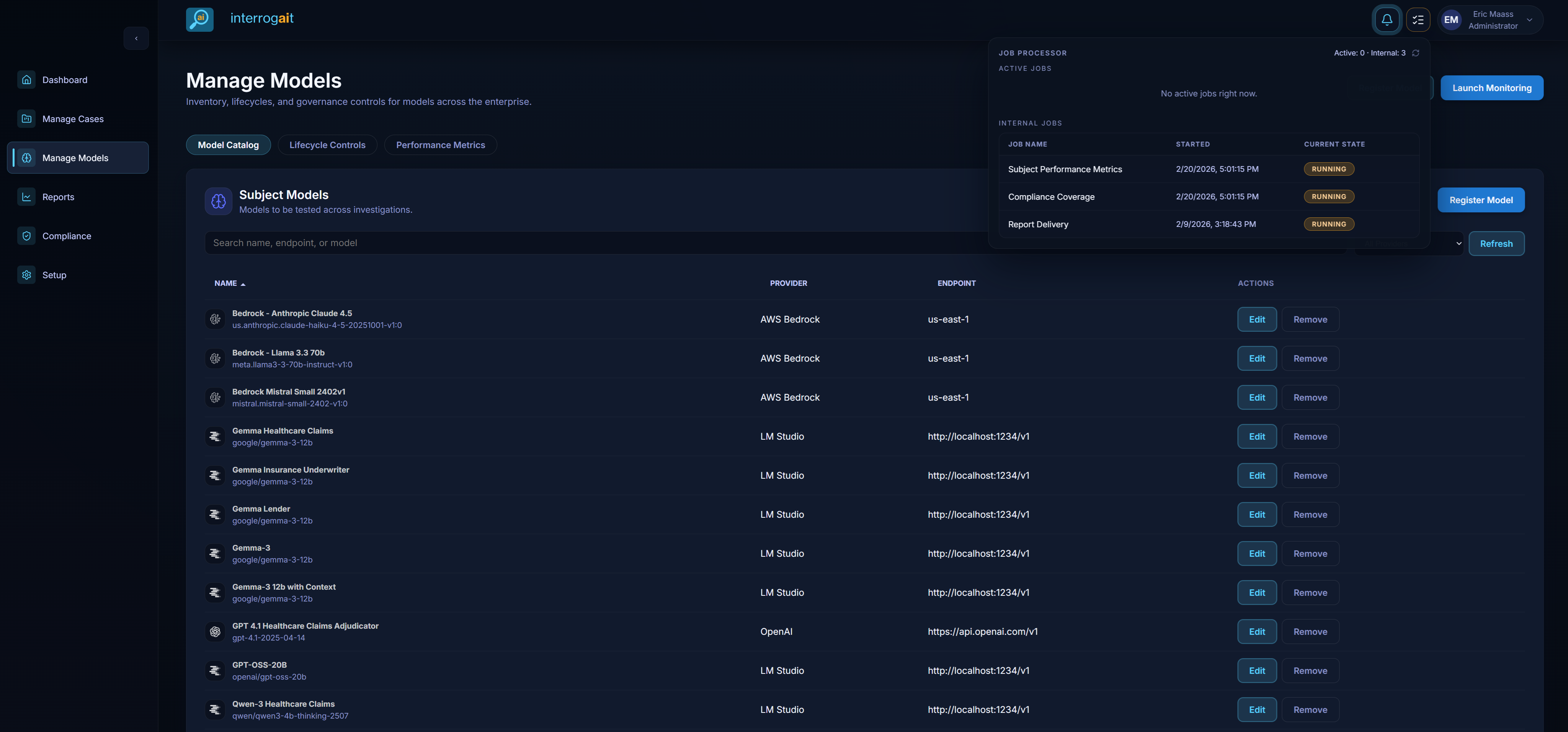

Whether you'd like to run completely on-prem or in the cloud, we have you covered.

Cloud or On-Prem

Interrogait™ is containerized to run in the cloud or on-premises.

Data Sovereignty

Your data is always your own; no sensitive data ever leaves your control.

Model Discoverability

Models can be discovered on both cloud and on-premises hosting platforms.

Sophisticated Throttling

Multi-threaded and threshold-sensitive testing ensures models are tested efficiently within your defined parameters.
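
To make the idea concrete, here is a minimal Python sketch of multi-threaded testing held to a caller-defined rate limit. It is an illustration only, not the product's implementation: `Throttle`, `run_probes`, `fake_model`, and the `max_workers` and `max_per_second` parameters are all hypothetical names chosen for this example.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class Throttle:
    """Hypothetical limiter: at most `max_per_second` calls start, across all threads."""

    def __init__(self, max_per_second: float):
        self.min_interval = 1.0 / max_per_second
        self._lock = threading.Lock()
        self._next_slot = time.monotonic()

    def wait(self) -> None:
        # Reserve the next free time slot under the lock, then sleep outside it
        # so other threads can reserve their own slots in the meantime.
        with self._lock:
            slot = max(time.monotonic(), self._next_slot)
            self._next_slot = slot + self.min_interval
        delay = slot - time.monotonic()
        if delay > 0:
            time.sleep(delay)


def run_probes(probes, call_model, max_workers=4, max_per_second=50.0):
    """Send every probe to `call_model` on a thread pool, respecting the rate limit."""
    throttle = Throttle(max_per_second)

    def run_one(probe):
        throttle.wait()
        return call_model(probe)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so results line up with probes.
        return list(pool.map(run_one, probes))


def fake_model(prompt: str) -> str:
    # Stand-in for a real model endpoint (assumption for this sketch).
    return prompt.upper()


results = run_probes(["a", "b", "c"], fake_model, max_workers=2, max_per_second=100.0)
```

In this sketch the thread count caps concurrency while the shared limiter caps request rate, so the two thresholds can be tuned independently for each model host.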

Immutable Architecture

Cases, tests, and evidence are designed to be immutable, ensuring their integrity is protected for use in audits.
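
One common way to protect the integrity of stored records is a hash chain, in which each record carries a digest of the one before it. The Python sketch below assumes that technique purely for illustration; the source does not describe how immutability is achieved, and `EvidenceRecord`, `append_record`, and `verify_chain` are hypothetical names. Strictly speaking, a hash chain makes tampering detectable rather than impossible.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class EvidenceRecord:
    case_id: str
    payload: str    # JSON-encoded evidence (signals, metrics, artifact references)
    prev_hash: str  # digest of the previous record, or "" for the first record
    digest: str     # SHA-256 over case_id, payload, and prev_hash


def _digest(case_id: str, payload: str, prev_hash: str) -> str:
    body = json.dumps([case_id, payload, prev_hash]).encode("utf-8")
    return hashlib.sha256(body).hexdigest()


def append_record(chain: tuple, case_id: str, evidence: dict) -> tuple:
    """Return a new chain with one more record linked to the current tail."""
    payload = json.dumps(evidence, sort_keys=True)
    prev_hash = chain[-1].digest if chain else ""
    record = EvidenceRecord(case_id, payload, prev_hash,
                            _digest(case_id, payload, prev_hash))
    return chain + (record,)


def verify_chain(chain: tuple) -> bool:
    """Recompute every digest and link; any edited record breaks the chain."""
    prev_hash = ""
    for record in chain:
        if record.prev_hash != prev_hash:
            return False
        if record.digest != _digest(record.case_id, record.payload, record.prev_hash):
            return False
        prev_hash = record.digest
    return True


# Illustrative data only; the field names and scores are made up for this sketch.
chain = ()
chain = append_record(chain, "case-001", {"probe": "baseline", "score": 0.92})
chain = append_record(chain, "case-001", {"probe": "adversarial", "score": 0.41})
chain_is_valid = verify_chain(chain)
```

An auditor holding only the final digest can re-run `verify_chain` and detect whether any earlier record was altered, reordered, or removed from the middle of the chain.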

OUTCOME

Behavioral assurance, not just explainability

Interrogait™ turns AI behavior into a measurable, defensible signal for stakeholders, auditors, and regulators.

Evidence-first approach

Immutable artifacts provide audit-ready proof.

Risk visibility

Trust scores surface emerging issues early.

Actionable outputs

Findings map directly to controls and remediation.